As generative AI continues to rapidly evolve, security professionals keep coming back to the same question: Is AI good or bad for cybersecurity?

The answer isn’t that simple.

Generative AI models came with promises of major threat detection improvements and other benefits for security teams, like assisting in threat modeling, providing context around signals, and more. However, AI is also helping threat actors make their scams seem more legitimate and churn out phishing emails at a faster pace.

And then there are the other, more unexpected impacts of AI advancements.

Earlier this year, for instance, researchers identified malicious machine learning models on the Hugging Face AI development platform that used deliberately broken Python Pickle files to evade detection.
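Pickle is risky because loading a pickle can import and call arbitrary Python objects, and it runs opcodes in order. A payload placed before a deliberate break in the file can still execute, even if a scanner gives up on the "broken" file. The sketch below is a hypothetical static scanner, not Hugging Face's actual tooling. It uses the standard library's `pickletools.genops` to list the globals a pickle would import without ever unpickling it, and it keeps whatever it found before a parse error. The `SUSPICIOUS_MODULES` denylist and the `STACK_GLOBAL` resolution are simplified, best-effort assumptions.

```python
import io
import pickle
import pickletools

# Illustrative denylist only; a real scanner would need a far more complete policy.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "__builtin__", "socket", "sys"}


def scan_pickle(data: bytes) -> dict:
    """Hypothetical helper: statically list the globals a pickle imports, without loading it.

    Findings made before a parse error are kept, because pickle executes opcodes
    sequentially and anything before a deliberate break would still run on load.
    """
    imports = []
    strings = []  # recent string pushes, used (heuristically) to resolve STACK_GLOBAL
    malformed = False
    try:
        for opcode, arg, _pos in pickletools.genops(io.BytesIO(data)):
            if opcode.name in ("SHORT_BINUNICODE", "BINUNICODE", "UNICODE"):
                strings.append(arg)
            elif opcode.name == "GLOBAL":
                # Protocols 0-3: pickletools reports the argument as "module name".
                module, name = arg.split(" ", 1)
                imports.append((module, name))
            elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
                # Protocol 4+: module and name are usually the two most recent strings.
                imports.append((strings[-2], strings[-1]))
    except ValueError:
        # Truncated or otherwise invalid stream: report it, but keep earlier findings.
        malformed = True
    suspicious = [imp for imp in imports if imp[0].split(".")[0] in SUSPICIOUS_MODULES]
    return {"imports": imports, "suspicious": suspicious, "malformed": malformed}


# Usage: scan a harmless pickle that references builtins.print, then a truncated copy of it.
sample = pickle.dumps(print, protocol=4)
print(scan_pickle(sample))
print(scan_pickle(sample[:-1]))  # missing STOP opcode: malformed, but the import is still reported
```

The design choice that matters is not discarding results on failure: a scanner that rejects or skips a malformed file without reporting what it already saw can miss exactly the kind of evasion described above.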

And in May, the founder of the curl project added a step to its bug report submission process to identify whether reporters used AI, citing frustration with “AI slop” security reports.

In our recent June Tradecraft Tuesday, Chris Henderson, Chief Information Security Officer at Huntress, and Truman Kain, Staff Product Researcher at Huntress, talked about the good, the bad, and the ugly sides of AI, and how it’s helping (and hurting) both defenders and threat actors.

How threat actors are using AI

Attackers are already using AI in various ways, including:

- Deepfakes

- Pig butchering scams

- Text for phishing emails

- Phishing kits

- Promoting fake AI tools to spread malware

- AI-generated robocalls

Here are some of the most common ways that we see threat actors using AI in attacks.

Deepfakes: Deep trouble

Anyone browsing TikTok, X (Twitter), or other social media platforms has likely come across AI-generated deepfakes (and if you haven’t, go Google “Tom Cruise deepfake,” and thank us later). But deepfakes aren’t just silly—they’ve become scarily accurate, and threat actors are using that to their advantage.

Take the fake IT worker scam, for instance, which many threat actors, especially those linked to North Korea, have recently been using. Here, attackers use deepfakes to pose as legitimate IT workers during job interviews. After securing a role, they use their access to steal sensitive data and, in some cases, extort their employers. Many companies, including cryptocurrency exchange Kraken, have been targeted by this type of attack.

Attackers are also using audio deepfakes to carry out targeted vishing (voice phishing) attacks. In these attacks, threat actors impersonate the voice of a trusted person to convince targets to share sensitive information or hand over funds.

In May, for instance, the FBI warned that threat actors are pretending to be senior US officials, sending voice memos to other government officials and their contacts in order to build rapport before gaining access to their personal accounts.

Another popular, and particularly despicable, example is the “grandparent scam,” in which scammers call grandparents, use a voice cloned from a clip of a grandchild to impersonate them, claim to be in a crisis, and ask for urgent financial help.

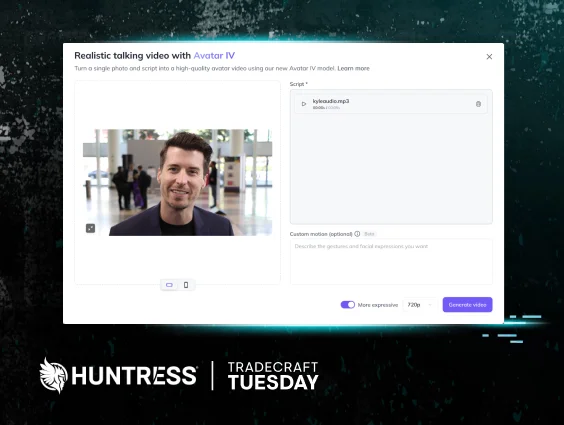

Figure 1: The creation process for a deepfake video using AI tools

The scams above mostly use audio deepfakes, but there have been some incidents that have involved both audio and video deepfakes. These types of deepfakes allow threat actors to add some level of credibility to their attack…if they can pull it off.

Last year, a finance employee at a multinational firm was invited to a video call with several staff members, including someone he thought was the CFO. But the “colleagues” were actually deepfake recreations, and the employee was tricked into transferring $25 million to scammers.

This incident is particularly interesting because it was a real-time deepfake that involved face swaps in addition to audio. Live deepfakes like this are still a challenge for threat actors because they require high-end, specialized software and hardware that isn’t freely available.

Still, the technology for creating deepfakes that aren’t live, including text-to-video and text-to-audio tools, has rapidly improved. In fact, our demo video shows just how easy it was for us to create a deepfake of Huntress’ own CEO.

What’s truly concerning about deepfake scams? In our eyes, it’s the fact that these types of scams are turning existing verification processes upside down.

Think about when IT teams receive a password reset request via a ticket. They’d typically hop on a Zoom call with the requester as one way to verify their identity, right? Well, increasingly convincing video and audio deepfakes undermine this verification measure and introduce further risk.

As deepfakes continue to evolve, businesses will need to rethink their existing verification processes. They’ll also need to train their employees to look for red flags—and have clear, actionable plans in place for employees who do find themselves face to face with a deepfake scam.

AI for social engineering, exploitation, and evasion

Threat actors are also using AI in attacks that involve social engineering. That includes pig butchering scams, where attackers build up their targets’ trust over time before tricking them into fraudulent investments.

Attackers use AI to write phishing emails (especially attackers who don’t speak English as their first language), eliminating many of the traditional red flags of phishing, like spelling errors or questionable grammar. We’ve also seen AI tools integrated into phishing kits so that threat actors can translate landing pages and emails into other languages and scale their attacks. Overall, these capabilities could allow threat actors to send out phishing emails at a faster rate.

Here are some other ways that threat actors are using AI:

- Attackers are using social media to promote fake, malware-laden AI tools in order to ride the popularity of AI and convince targets to download them.

- AI can enable polymorphic malware: many slightly different malware samples, each with a different signature, which can both overwhelm researchers and bypass signature-based detection.

- There has also been a ton of discussion about whether AI tools can scan for and find vulnerabilities. Recently, researcher Sean Heelan said that OpenAI’s o3 model helped him find a Linux kernel vulnerability (CVE-2025-37899).
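To see why polymorphism defeats signatures, consider the simplest signature of all: an exact file hash. The sketch below involves no AI and no malware. It uses a harmless one-line script as a stand-in "sample" and a hypothetical `mutate` helper that appends a random junk comment, leaving behavior unchanged. Real polymorphic malware rewrites code far more aggressively, but even this trivial change gives every variant a new SHA-256 hash.

```python
import hashlib
import random

# A harmless stand-in for a sample: every variant below behaves identically.
BASE_SCRIPT = "print('hello from a harmless sample')\n"


def mutate(script: str, seed: int) -> str:
    """Hypothetical illustration: return a functionally equivalent variant with a junk comment."""
    rng = random.Random(seed)
    junk = "".join(rng.choice("abcdefghijklmnopqrstuvwxyz") for _ in range(16))
    return script + f"# {junk}\n"


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# A naive blocklist that only knows the hash of the original sample.
known_bad_hashes = {sha256(BASE_SCRIPT)}

variants = [mutate(BASE_SCRIPT, seed) for seed in range(5)]
missed = [v for v in variants if sha256(v) not in known_bad_hashes]
print(f"{len(missed)} of {len(variants)} variants evade the hash blocklist")
```

This is why defenders lean on behavioral and content-aware analysis rather than exact-match signatures when samples can be regenerated cheaply.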

How defenders are using AI in cybersecurity

Let’s look at the other side of the coin now. Defenders are reaping the benefits of AI in various ways, including:

- Threat detection and analysis

- Compliance

- Research and development

Generative AI came with big promises (and lots of hype) around how it would improve security processes. However, where has it truly been delivering, and where are defenders still facing pain points?

Threat detection

Many security professionals had high hopes that generative AI would lead to immense advancements in threat detection. And while it has certain benefits, it’s not quite there yet.

AI is very good at drawing inferences, but it’s not yet good at binary decision-making (specifically, answering questions like “is this malicious or not?”). That’s partly because AI models generally aren’t trained to tell users that they don’t know the answer; instead, they give their best guess.
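One common way to avoid forcing a best guess is to let the system abstain. The sketch below assumes a hypothetical model that outputs a maliciousness score between 0 and 1 (many generative models do not produce calibrated scores like this). It only issues a binary verdict at the confident extremes and routes everything in between to a human. The thresholds are made-up placeholders that would need tuning on labeled data.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    BENIGN = "benign"
    MALICIOUS = "malicious"
    NEEDS_REVIEW = "needs_human_review"


@dataclass(frozen=True)
class TriagePolicy:
    # Hypothetical thresholds; real values would have to be tuned on labeled data.
    benign_below: float = 0.10
    malicious_above: float = 0.90

    def decide(self, score: float) -> Verdict:
        """Turn a maliciousness score in [0, 1] into a verdict, abstaining when unsure."""
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score must be in [0, 1], got {score}")
        if score >= self.malicious_above:
            return Verdict.MALICIOUS
        if score <= self.benign_below:
            return Verdict.BENIGN
        # The uncertain middle band is where a model would otherwise guess; send it to a person.
        return Verdict.NEEDS_REVIEW


policy = TriagePolicy()
for s in (0.03, 0.55, 0.97):
    print(s, policy.decide(s).value)
```

Widening the middle band sends more signals to analysts but reduces confident mistakes; narrowing it does the opposite.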

AI does currently offer many advantages in assisting with threat analysis, however. Google’s Sec-Gemini and Gemini 1.5 Pro have both been applied to malware analysis, and they can be particularly helpful in mitigating the malware polymorphism challenges outlined earlier.

Security engineers and architects are finding success in using AI to help with threat modeling. For example, ZySec’s CyberPod AI is designed to help engineers walk through threat modeling scenarios with real-time insights.

AI is also primed to help analysts with context enrichment during detection and response. When analysts look at a given signal or piece of telemetry, they currently use disparate, bespoke tools to gather the contextual information they need to determine whether it is malicious. AI can speed up this process by adding that context more efficiently, which could ultimately lower the mean time-to-decision for analysts reviewing signals.
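The enrichment step itself is easy to picture: pull context about one signal from several sources into a single record that an analyst, or an AI assistant summarizing for the analyst, can review at once. The sketch below is hypothetical throughout. The in-memory dictionaries stand in for an asset inventory, a threat intel feed, and a process-prevalence baseline, and the IP address comes from a reserved documentation range.

```python
from dataclasses import dataclass, field


@dataclass
class Signal:
    host: str
    process: str
    remote_ip: str


@dataclass
class EnrichedSignal:
    signal: Signal
    context: dict = field(default_factory=dict)


# Hypothetical stand-ins for the separate tools an analyst would otherwise query by hand.
ASSET_INVENTORY = {"fin-laptop-07": {"owner": "finance", "criticality": "high"}}
THREAT_INTEL = {"203.0.113.50": {"reputation": "known C2"}}
PROCESS_BASELINE = {"fin-laptop-07": {"powershell.exe": "rare"}}


def enrich(signal: Signal) -> EnrichedSignal:
    """Collect context from each source into one record; missing data is left empty or 'unknown'."""
    ctx = {
        "asset": ASSET_INVENTORY.get(signal.host, {}),
        "ip_intel": THREAT_INTEL.get(signal.remote_ip, {}),
        "process_prevalence": PROCESS_BASELINE.get(signal.host, {}).get(signal.process, "unknown"),
    }
    return EnrichedSignal(signal=signal, context=ctx)


enriched = enrich(Signal(host="fin-laptop-07", process="powershell.exe", remote_ip="203.0.113.50"))
print(enriched.context)
```

Making missing context explicit (an empty dict or "unknown") rather than silently omitting it keeps gaps visible to whoever, or whatever, makes the final call.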

Compliance, research, and development

Businesses grappling with governance, risk, and compliance can use AI in several ways. Security compliance platform Drata is developing AI tools that it claims will automate evidence collection, which can help with compliance. Trust management platform Vanta said its AI security assessment tools will help standardize AI risk evaluation, a major benefit for businesses trying to scope out their compliance.

Figure 3: AI tools can help with compliance

Here are some other areas where AI can help with compliance:

- A key part of a successful security program isn’t just putting controls in place, but also continuously measuring how effective those controls are. AI models can be quite useful for that ongoing measurement.

- AI tools can help security teams work through vendor due diligence questionnaires during risk evaluations with clients they’re trying to bring on. Human review is still mandatory here in case the AI hallucinates, but this use case drastically reduces the time it takes to respond to these questionnaires.

Finally, generative AI is upping the game in threat simulation for research and development teams. While businesses previously used canned playbooks in order to simulate threats, AI tools can create a more realistic test environment with dynamic and unpredictable attack scenarios on the fly.

AI is helping defenders, but it’s also hurting them.

What is AI’s biggest benefit for security professionals? Speed.

For offensive teams, AI can help speed up operations by automating the boring stuff, accelerating research, quickly drafting scripts and exploits, and more. For defensive teams, it boosts diligence and adds contextual awareness more quickly.

But AI isn’t all that it’s hyped up to be.

AI models still have growing pains: as we highlighted above, they struggle with binary decisions, and they can also falter with fuzzy reasoning.

Perhaps the biggest AI-related challenge that we face right now is the level of trust that we have in AI models. With AI, there’s still a very real possibility of hallucinations, prompt injection, or other issues leading to erroneous responses. These can have negative impacts on businesses if AI is used without safeguards.

For employees using AI, reviewing AI responses should be a mandatory part of the workflow. We can’t just put all our faith in these models and assume they’ll always be correct.

Lastly, we’ll leave you with this: AI has definite benefits, but it’s not at the point where it’s replacing people, particularly from a defensive standpoint. AI is good at helping reduce the haystack that SOC analysts need to sort through when determining malice, and it’s great for adding context to signals. But it’s not yet at the place where it can make the binary decision of whether something is good or bad.

Human oversight is still very much needed.

Like what you just read? Join us every month for Tradecraft Tuesday, our live webinar where we expose hacker techniques and talk nerdy with live demos. Next month, our Tradecraft Tuesday episode, “Hacking in Hollywood: What It Gets Wrong (and Right) about Cybersecurity,” will dive into the coolest—and straight-up cringiest—cybersecurity movie moments. Secure your spot now!